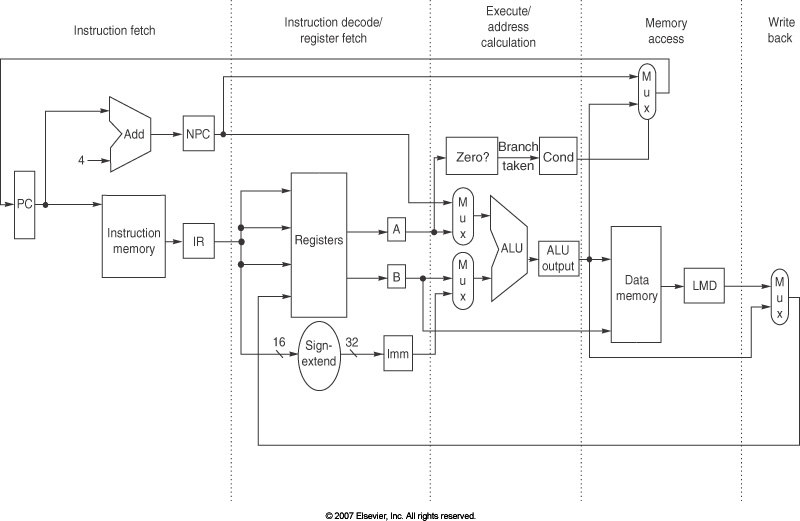

I also did not explain the difference between the `rs`, `rt`, and `rd` register specifiers in an instruction very well. The distinction is architecture-specific, of course, and not the most important factor in understanding instruction execution, but we should get it right.
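For concreteness, here is a minimal sketch that assumes the MIPS32 encoding, where these field names come from. In R-type instructions, `rs` and `rt` are the two source registers and `rd` is the destination. I-type instructions have no `rd` field at all. There, `rt` is the destination for loads and `addi`, but it is a second source for stores and branches. The opcode table is a tiny illustrative subset, and the names `decode`, `Decoded`, and `I_TYPE` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical, deliberately tiny subset of MIPS32 encodings.
# I-type: opcode -> (mnemonic, what the rt field means for this opcode)
I_TYPE = {
    0x08: ("addi", "dest"),    # addi rt, rs, imm   : rt = rs + imm
    0x23: ("lw", "dest"),      # lw   rt, imm(rs)   : rt = mem[rs + imm]
    0x2B: ("sw", "source"),    # sw   rt, imm(rs)   : mem[rs + imm] = rt
    0x04: ("beq", "source"),   # beq  rs, rt, off   : compare rs and rt
}
# R-type (opcode 0): funct -> mnemonic, all of the form  op rd, rs, rt
R_TYPE_FUNCT = {0x20: "add", 0x22: "sub"}


@dataclass
class Decoded:
    mnemonic: str
    sources: tuple[int, ...]   # register numbers read by the instruction
    dest: int | None           # register number written, if any


def decode(word: int) -> Decoded:
    opcode = (word >> 26) & 0x3F  # bits 31..26
    rs = (word >> 21) & 0x1F      # bits 25..21: always a source
    rt = (word >> 16) & 0x1F      # bits 20..16: source or destination
    if opcode == 0:
        # R-type: rd (bits 15..11) is the destination; rs and rt are sources.
        rd = (word >> 11) & 0x1F
        funct = word & 0x3F
        return Decoded(R_TYPE_FUNCT[funct], (rs, rt), rd)
    # I-type: bits 15..0 hold an immediate, so there is no rd field.
    mnemonic, rt_role = I_TYPE[opcode]
    if rt_role == "dest":
        return Decoded(mnemonic, (rs,), rt)
    return Decoded(mnemonic, (rs, rt), None)


print(decode(0x012A4020))  # add $t0, $t1, $t2
print(decode(0x8D280004))  # lw  $t0, 4($t1)
print(decode(0xAD280004))  # sw  $t0, 4($t1)
```

The same 5-bit field `rt` is written by `lw` but read by `sw`. That is why it helps to think in terms of "which registers are read and which is written" rather than just memorizing the field names.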
