(thanks to Chishiro for spotting those excellent diagrams on Wikipedia.)

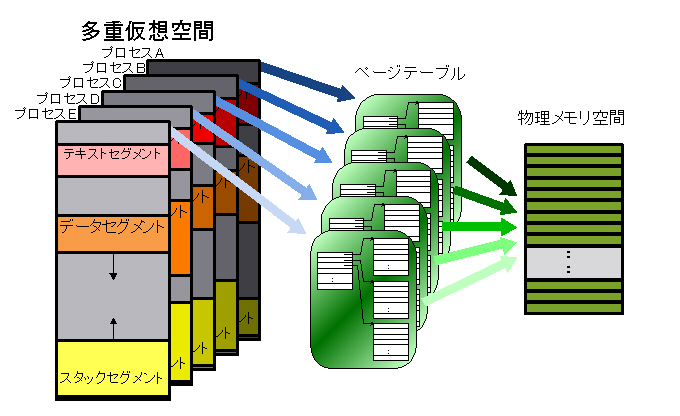

The simplest approach would be a large, flat page table with one entry

per page. The entries are known as page table entries, or

PTEs. However, this approach results in a page table that is

too large to fit inside the MMU itself, meaning that it has to be in

memory. In fact, for a 4GB address space, with 32-bit PTEs and 4KB

pages, the page table alone is 4MB! That's big when you consider that

there might be a hundred

The solution is multi-level page tables. As the size of the

The translation from virtual to physical address must be fast.

This fact argues for as much of the translation as possible to be done

in hardware, but the tradeoff is more complex hardware, and more

expensive

The reference trace is the way we describe what memory has recently been used. It is the total history of the system's accesses to memory. The reference trace is an important theoretical concept, but we can't track it exactly in the real world, so various algorithms for keeping approximate track have been developed.

Paging is the

When an application attempts to reference a memory address, and the

address is not part of the

Put in skis, yacht, bowling ball, baseball here... demonstrate OPT pretty easily.

The optimal algorithm (known as OPT or the clairvoyant algorithm) is known: throw out the page that will not be reused for the long time in the future. Unfortunately, it's impossible to implement, since we don't know the exact future reference trace until we get there!

There are many algorithms for doing

When a clock

Because NRU does not distinguish among the pages in one of the four classes, it often makes poor decisions about what pages to page out.

According to one source, Linux, FreeBSD and Solaris may all use a very

heavily-modified form of LRU. (I suspect this

I believe early versions of BSD used clock; I'm not sure if they still do.

Most systems include at least a simple way to set a maximum upper

bound on the size of

Note that while the other algorithms conceivably work well when

treating all

Reviewing:

(Images from O'Reilly's book on Linux device drivers, and from lvsp.org.)

We don't have time to go into the details right now, but you should be

aware that doing the page tables for a 64-bit

Linux uses a three-level page table system. Each level supports 512 entries: "With Andi's patch, the x86-64 architecture implements a 512-entry PML4 directory, 512-entry PGD, 512-entry PMD, and 512-entry PTE. After various deductions, that is sufficient to implement a 128TB address space, which should last for a little while," says Linux Weekly News.

#define IA64_MAX_PHYS_BITS 50 /* max. number of physical address bits (architected) */ ... /* * Definitions for fourth level: */ #define PTRS_PER_PTE (__IA64_UL(1) << (PTRS_PER_PTD_SHIFT))

In modern systems, multiple page files are usually supported, and can

often be added dynamically. See the